Taylor Swift AI-generated explicit photos just tip of iceberg for threat of deepfakes

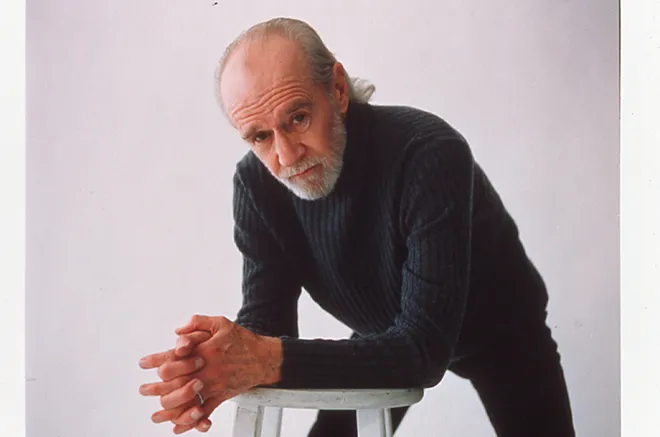

In the news over the past few days, you might have seen that George Carlin released a new standup comedy special, that explicit photos were taken of Taylor Swift, and that celebrities like Steve Harvey were hawking Medicare scams on YouTube.

Except these didn't actually happen: they were all faked using artificial intelligence. An AI-generated George Carlin audio special has drawn a lawsuit from his estate, filed Thursday. The same day, deepfaked pornographic images of Swift circulated on X, formerly Twitter, and were viewed millions of times before being taken down by the social media site. YouTube said it terminated 90 accounts and suspended multiple advertisers for faking celebrity endorsements. These fakes have drawn fierce criticism, but Carlin, Swift and Harvey are hardly the first celebrities to be recreated with AI technology, and they won't be the last.

And the AI problem is only going to get worse as the technology improves every day while the law lags behind.

Taylor Swift AI pictures controversy symbolizes greater threat

“Computers can reproduce the image of a dead or living person,” says Daniel Gervais, a law professor at Vanderbilt University who specializes in intellectual property law. “In the case of a living (person), the question is whether this person will have rights when his or her image is used.” (Currently only nine U.S. states have laws against nonconsensual deepfake photography.)

Josh Schiller, an attorney for the Carlin estate, said in a statement that their lawsuit is "not just about AI, it's about the humans that use AI to violate the law, infringe on intellectual property rights, and flout common decency." It's one of many lawsuits in the courts right now about the future of artificial intelligence. A bipartisan group of U.S. senators has introduced legislation called the No Artificial Intelligence Fake Replicas And Unauthorized Duplications Act of 2024 (No AI FRAUD). Supporters say the measure will combat AI deepfakes, voice clones and other harmful digital human impersonations.

Last summer, the use of AI was a key sticking point in negotiations between the striking Hollywood actors' and writers' unions and the major studios. The unions fought for strict regulations on AI usage by media corporations and feared the technology could be used to replace human labor. "AI was a deal breaker," SAG-AFTRA president Fran Drescher said after the actors' strike was resolved. "If we didn’t get that package, then what are we doing to protect our members?"

The pace at which the technology advances is exponential, and society will likely have to reckon with many more hyper-realistic but fake images, video and audio. “The power of computers doubles every one to two years,” says Haibing Lu, associate professor of Information Systems & Analytics at Santa Clara University. “Over the next 10 years, it is going to be amazing.”

It's already hard to tell what's real and what's not, making it difficult for platforms like YouTube and X to police AI as it multiplies.

"We are aware of a growing trend of ads, videos and channels that use celebrity likenesses in an attempt to scam or deceive users, and have been investing heavily in our detection and enforcement against these practices," YouTube said in a statement. "This effort is ongoing and we continue to remove ads and terminate channels.”

It's only a matter of time before there will be no way to visually differentiate between a real image and an AI-generated image. "I'm very confident in saying that in the long run, it will be impossible to tell the difference between a generated image and a real one," says James O'Brien, a computer science professor at the University of California, Berkeley.

"The generated images are just going to keep getting better."


Contributing: Chris Mueller, Taijuan Moorman, USA TODAY; The Associated Press
